Missing a few technical basics on your new website can really hamper your traffic.

I often see friends and clients ignore small technical errors because the errors are SMALL and they are SCARED to work on them. But those errors will cause BIG problems later.

So, it’s better to fix those technical errors now than to deal with them later. Follow along with this post and learn the basic technical things you should definitely check on your new website.

For older sites, we should perform periodic technical SEO audits. If you do not know how to do one, I’ve written a detailed post on How to perform technical SEO audit?

Now, in this post, let’s talk about technical SEO for new websites.

Basic Technical Things You Should Look Into For Your New Website:

HTTPS

HTTPS is one of the first things you should set up for your new website. Cheap hosting that serves your site over plain, unsecured HTTP can be expensive in terms of SEO: it can hurt the crawling, indexing, and ranking of your website.

A secure site is a happy path for web crawlers. You also need a secure site so that users feel safe sharing their information (if needed). Although every website should use HTTPS, eCommerce websites must have it at any cost because they handle transactions.

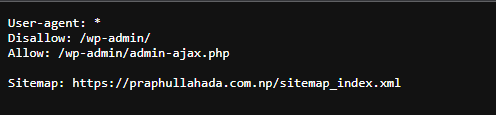

Robots.txt

Robots.txt is important for better crawling of the website. It acts as a mediator between bots and the website, like the bouncer who stands at the gate of a nightclub. Just as the bouncer checks people and lets in only those who are allowed, robots.txt tells bots which parts of the website they may crawl. Keep in mind that it is a set of instructions, not a lock: well-behaved bots such as Googlebot follow it, but it cannot stop a bot that chooses to ignore it.

If a website doesn’t have robots.txt, bots assume they may crawl everything, including pages you don’t care about, and that can hamper the crawling of your important pages. So, a proper robots.txt is needed.

Robots.txt lives in the root folder of your website. SEO plugins in WordPress can automatically generate a robots.txt file for you.

Add your sitemap URL to robots.txt for better crawlability.
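
To make the bouncer idea concrete, here is a minimal sketch that tests a robots.txt file with Python's built-in `urllib.robotparser` before you upload it. The rules and the `example.com` domain are assumptions for illustration (they resemble what WordPress sites commonly use), so swap in your own paths and sitemap URL.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an example WordPress site; adjust paths and domain.
# The Allow line comes first because Python's parser applies the first
# matching rule.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A normal blog post is open to every bot.
print(parser.can_fetch("*", "https://example.com/blog/my-post"))
# The admin area is blocked for bots that respect robots.txt.
print(parser.can_fetch("*", "https://example.com/wp-admin/options.php"))
# The sitemap URL declared in the file.
print(parser.site_maps())
```

Running this kind of check locally is a cheap way to catch a stray `Disallow: /` before it blocks your whole site.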

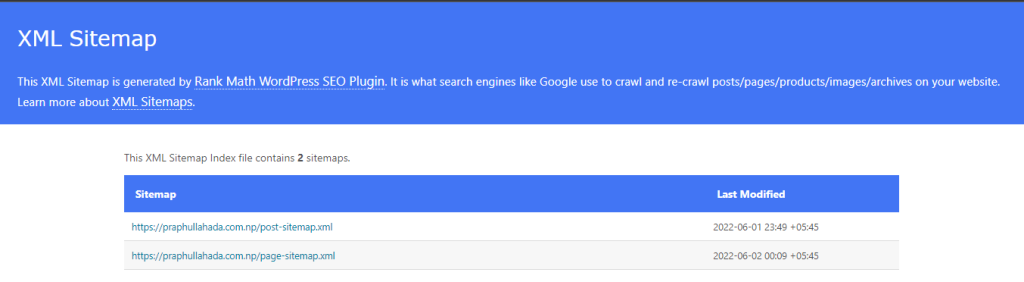

XML Sitemap

If robots.txt is the bouncer that stands at the gate, sitemap.xml is the one inside the nightclub that directs us to our destination. It shows bots the way to the important pages. Without a sitemap, bots can still discover pages by following links, but they cannot crawl as effectively. They would just roam around without any clue.

A sitemap, as the name suggests, is a map of the site. Be careful about which pages you put on it: list only the pages you actually want crawled and indexed. A proper sitemap can do wonders for web crawlers.

SEO plugins can generate sitemaps. Alternatively, any online sitemap generator tool can help.

If you are coding the site manually, place the sitemap in the root folder of your website.
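
If you are building the file by hand, a sitemap is just a small XML document. The sketch below generates one with Python's standard library, following the sitemaps.org format. The function name `build_sitemap` and the `example.com` URLs are my own placeholders, not something a plugin or tool defines.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; list only the ones you want crawled and indexed.
pages = [
    "https://example.com/",
    "https://example.com/blog/my-post",
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)

# To publish it, save the string as sitemap.xml in the site's root folder:
# with open("sitemap.xml", "w", encoding="utf-8") as f:
#     f.write(sitemap_xml)
```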

Proper URL Structure

Short, exact-keyword URLs are the best in terms of user experience, web crawlers, and SEO. An exact-keyword URL is easy for bots to read, so they can get a good idea of what the content is about beforehand.

Long URLs that contain numbers are outdated and nobody recommends them now. They become a pain for SEOs in the long term.

Be extra careful when choosing a permalink structure, because frequent URL changes are a bad signal for crawlers.

Suggestion for optimized URL in terms of SEO: domainname.com/post-name
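
WordPress builds the post-name part for you, but on a hardcoded site you have to create it yourself. Here is a rough sketch of how a post title could be turned into a short, readable slug. The helper names `slugify` and `post_url` are hypothetical, and the rules (lowercase, hyphens, ASCII only) are a common convention rather than a requirement from any search engine.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a post title into a lowercase, hyphen-separated URL slug."""
    # Strip accents and drop any characters that cannot be written in ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


def post_url(domain: str, title: str) -> str:
    """Build a domainname.com/post-name style URL."""
    return f"https://{domain}/{slugify(title)}"


print(post_url("domainname.com", "How to Perform a Technical SEO Audit?"))
# prints: https://domainname.com/how-to-perform-a-technical-seo-audit
```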

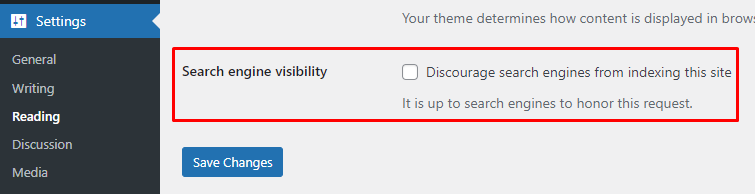

Discourage From Search Engine

A lot of new WordPress website owners forget to untick the "Discourage search engines from indexing this site" option in the WordPress backend. As a result, search engines are asked not to index the new website at all.

This single option can be very harmful to your newly developed WordPress website.

To check and uncheck this option in WordPress, go to Dashboard > Settings > Reading.

For hardcoded websites, make sure the pages you want in search results have a meta robots tag of index, follow, and that none of them carries a noindex tag by mistake.
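
You can also check this from the page source. The sketch below parses a page's HTML with Python's built-in `html.parser` and reports whether a meta robots tag contains `noindex`. The class and function names are made up for this example, and it only looks at the meta tag (not HTTP headers or robots.txt), so treat it as a quick sanity check rather than a full audit.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [
                d.strip().lower() for d in content.split(",") if d.strip()
            ]


def is_indexable(html: str) -> bool:
    """Return False if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives


# Example pages (hypothetical HTML snippets).
blocked = "<head><meta name='robots' content='noindex, nofollow' /></head>"
open_page = '<head><meta name="robots" content="index, follow"></head>'

print(is_indexable(blocked))    # prints: False
print(is_indexable(open_page))  # prints: True
```

A page with no meta robots tag at all also counts as indexable here, since index, follow is the default behavior.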

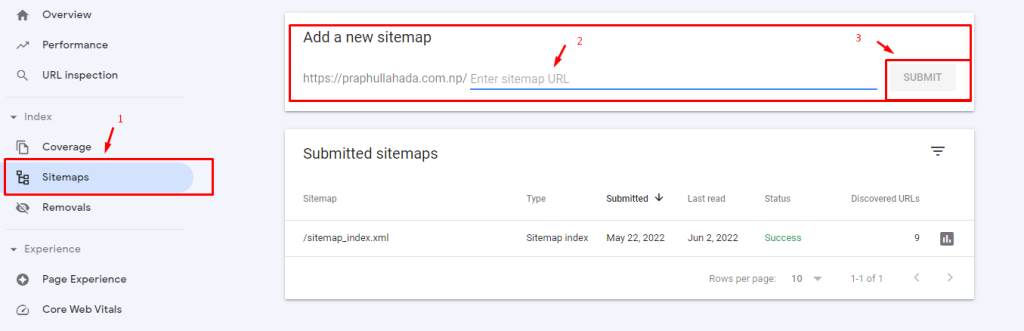

Set Up Google Search Console (GSC)

For newly developed websites, setting up tracking right away helps you spot and fix problems early, which can give an extra boost in traffic. For this tracking, use Google Search Console.

Google Search Console lets you easily monitor and in some cases resolve server errors, site load issues, and security issues like hacking and malware.

You can also use it to ensure any site maintenance or adjustments you make happen smoothly with respect to search performance. The best part? IT IS FREE.

You’ll get insights into top pages, top queries, website performance, coverage, click-through rate, valid pages, schema enhancements, manual actions and penalties, and much more.

Make sure to submit your sitemap in the Sitemaps section of GSC.

Set Up Google Analytics

After Google Search Console, another free traffic tracking tool from Google is Google Analytics.

Google Analytics helps you keep track of all the content that receives views and shares.

With this data, you can improve your most-viewed blog posts so that they appeal to your customers even more.

Google Analytics generates a breakdown of the page views each of your blog posts receives.

Don’t know how to configure Google Analytics? Visit Get Started with Analytics.

Add Schema Markup

Schema, or structured data, is important in SEO for feeding the right and exact information to Google. For a new website, providing the necessary information directly can be beneficial in many ways.

For WordPress websites, SEO plugins can generate a basic schema that is enough for a small new website. But if you want to go all in on schema, I recommend going through the schema.org vocabulary first and then playing around with tools like the Technical SEO schema markup generator.
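
To give you an idea of what such tools produce, here is a small sketch that builds an Article snippet in JSON-LD, the script-tag format commonly used for structured data. The `article_jsonld` helper and all the sample values are placeholders I made up. Only `@context`, `@type`, and the property names come from the schema.org vocabulary.

```python
import json


def article_jsonld(headline, author_name, url, date_published):
    """Return a <script> tag containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "url": url,
        "datePublished": date_published,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Placeholder values for illustration only.
snippet = article_jsonld(
    headline="Technical SEO Basics for New Websites",
    author_name="Author Name",
    url="https://example.com/technical-seo-basics",
    date_published="2024-01-01",
)
print(snippet)
```

The printed snippet goes inside the page's HTML. Run it through a validator before publishing.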

Basic Tools for Performing Technical SEO on a New Website:

Google Search Console

-To track the website performance and coverage issues

PageSpeed Insights / GTmetrix

-To check the website speed, observe website performance and recommendations

Ahrefs Webmaster Tools

-To check for technical errors on the website. This is the free version of Ahrefs, with limited crawling and only some of the features of the paid Ahrefs tool

Screaming Frog

-To run technical site audits and check for technical errors

SEO Plugins / Online Generator Tools

-To generate robots.txt, sitemap.xml, and a basic schema structure on WordPress.

For hardcoded sites, online robots.txt and sitemap generator tools can be used to generate the required files manually.

Schema Markup Creator and Validator

-To generate structured data, I personally recommend the Technical SEO Markup Generator Tool.

To validate the generated schema markup, use the Schema.org validator.

Having trouble setting up these technical things? Contact Praphulla Hada, an SEO Expert in Nepal.

Praphulla Hada is an SEO professional who has been involved in developing and launching several successful SEO campaigns. He has over 4 years of experience in the Search Engine Optimization industry. He has helped businesses achieve more sales and revenue through SEO.